Understanding the Evolving Landscape of Language Models

Introduction

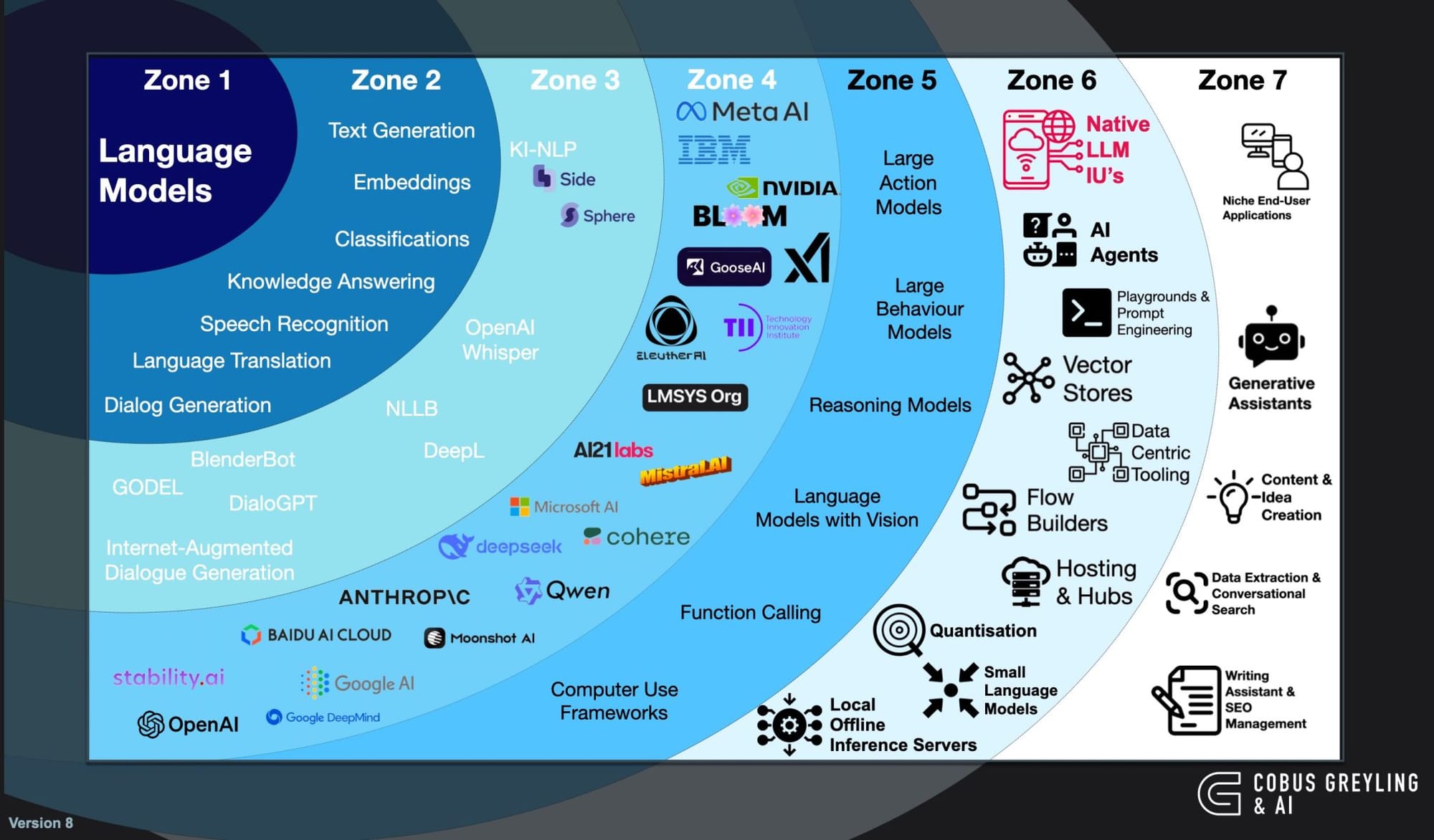

Language models have rapidly evolved, reshaping the way we interact with technology and revolutionizing industries. The image presented here divides the current ecosystem into seven zones, each representing distinct categories and functions of language models and associated technologies. Let’s explore each zone to understand the progression from foundational language models to end-user applications.

Zone 1: Language Models

Zone 1 represents the foundational layer where core language models operate. These models handle essential tasks such as text generation, embeddings, classification, knowledge answering, speech recognition, language translation, and dialog generation. Notable models include BlenderBot, DialoGPT, GODEL, and those designed for internet-augmented dialogue generation. This zone also features leading players like OpenAI, Google DeepMind, Stability AI, and others, highlighting their significant contributions to core language tasks.

Key Tools and Technologies:

- Text Generation: GPT-4, T5, BART

- Embeddings: Word2Vec, GloVe, Sentence-BERT

- Classification: BERT, RoBERTa

- Knowledge Answering: GPT-3.5-turbo, LaMDA

- Speech Recognition: OpenAI Whisper

- Language Translation: NLLB, DeepL

- Dialog Generation: BlenderBot, DialoGPT, GODEL

- Major Players: OpenAI, Google DeepMind, Stability AI, Anthropic, Baidu AI Cloud

Zone 2: Enhanced Language Processing

Building on the foundations, Zone 2 emphasizes enhanced language processing capabilities. Here, models extend text generation, embeddings, and classification toward more sophisticated language understanding, including translation and speech processing.

Key Tools and Technologies:

- Translation Models: DeepL, NLLB

- Speech Processing: OpenAI Whisper

- Internet-Augmented Dialogue: BlenderBot

- Major Players: OpenAI, Google AI, Anthropic, Cohere, Microsoft AI

Zone 3: Advanced AI Infrastructure

Zone 3 marks the transition from language-centric tasks to broader AI functionality. It includes knowledge-intensive NLP (KI-NLP) research and organizations such as LMSYS Org and AI21 Labs. These entities focus on building robust infrastructure that integrates language models with other AI capabilities, preparing the ground for more complex interactions.

Key Tools and Technologies:

- Knowledge-Intensive NLP (KI-NLP): Side, Sphere

- Platform Integrations: LMSYS Org, AI21 Labs

- Major Players: DeepSeek, Cohere, Microsoft AI

Zone 4: Specialized Large Models

As we move into Zone 4, the focus shifts to large behavior models, reasoning models, and language models with vision. Companies like Meta AI, NVIDIA, and Mistral AI are driving innovations in combining language processing with visual inputs and reasoning capabilities. By pairing text with images or multi-step reasoning, these models support applications that go beyond text-only generation, such as describing or answering questions about images.

Key Tools and Technologies:

- Large Models: Bloom, EleutherAI’s GPT-NeoX

- Vision-Language Models: CLIP, BLIP

- Major Players: Meta AI, NVIDIA, EleutherAI, TII, Mistral AI, AI21 Labs

Zone 5: Large Action and Behavior Models

Zone 5 introduces large action and behavior models, which are designed to decide on and carry out actions rather than only generate text, together with models that integrate reasoning and vision.

Key Tools and Technologies:

- Behavior Models: Gemini (Google DeepMind), ChatGPT

- Reasoning Models: GPT-4, Claude

- Vision Models: Flamingo (DeepMind)

- Major Players: OpenAI, Google DeepMind, Meta AI

Zone 6: Integration and Use

Zone 6 highlights how language models are integrated into practical applications, focusing on AI agents, native LLM interfaces, and data-centric tooling. Tools for vector storage, flow building, and hosting are pivotal here, making LLMs accessible and manageable at scale. This zone also features frameworks for building personalized and efficient user interactions.

Key Tools and Technologies:

- AI Agents: Auto-GPT, BabyAGI

- Data Management: ChromaDB, Weaviate

- LLM Orchestration and Flow Building: LangChain, Flowise

- Major Players: OpenAI, Cohere, DeepMind
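To make "vector storage" more concrete, here is a minimal in-memory sketch of what a vector store such as ChromaDB or Weaviate does conceptually: keep embeddings next to their source documents and return the closest ones to a query by cosine similarity. It does not use either product's API. The class name `TinyVectorStore` and the hand-made three-dimensional vectors are hypothetical stand-ins for real embeddings produced by a model like Sentence-BERT.

```python
import math


class TinyVectorStore:
    """Hypothetical in-memory vector store using brute-force cosine search."""

    def __init__(self):
        self._ids = []
        self._vectors = []
        self._documents = []

    def add(self, doc_id, vector, document):
        # Store the embedding together with its id and source text.
        self._ids.append(doc_id)
        self._vectors.append(list(vector))
        self._documents.append(document)

    @staticmethod
    def _cosine(a, b):
        # Cosine similarity: dot product divided by the product of the norms.
        dot = sum(x * y for x, y in zip(a, b))
        norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
        return dot / norm if norm else 0.0

    def query(self, vector, k=1):
        # Score every stored vector, then return the k best (id, score, document).
        scored = [
            (doc_id, self._cosine(vector, v), doc)
            for doc_id, v, doc in zip(self._ids, self._vectors, self._documents)
        ]
        scored.sort(key=lambda item: item[1], reverse=True)
        return scored[:k]


# Toy vectors chosen by hand for illustration; a real system would embed the text.
store = TinyVectorStore()
store.add("a", [0.9, 0.1, 0.0], "Whisper transcribes speech to text.")
store.add("b", [0.1, 0.9, 0.0], "NLLB translates between many languages.")
store.add("c", [0.0, 0.2, 0.9], "CLIP links images and captions.")

for doc_id, score, doc in store.query([0.85, 0.15, 0.05], k=2):
    print(doc_id, round(score, 3), doc)
```

With this query vector, the Whisper document ranks first because its vector points in nearly the same direction. The linear scan is the simplest correct approach; production vector databases replace it with approximate nearest-neighbor indexes such as HNSW graphs so that search stays fast over millions of vectors.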

Zone 7: End-User Applications

Finally, Zone 7 represents the culmination of AI advancements: niche end-user applications. These applications leverage the full spectrum of language model advancements to deliver customized, user-friendly solutions.

Key Tools and Technologies:

- Generative Assistants: Jasper, Copy.ai

- Content Creation: Notion AI, Frase

- Data Extraction & Search: Perplexity.ai, Andi

- Major Players: OpenAI, Google, Microsoft, Stability AI

Conclusion

The image underscores the ongoing evolution from core language models to integrated, intelligent systems capable of real-world applications. As technology advances, the fusion of language models with vision, reasoning, and interactive capabilities marks the next frontier in AI. Understanding these zones provides insight into how AI systems are structured, developed, and deployed in various contexts.

If we rethink the logic of categorizing these tools, we could approach it from several alternative perspectives. Here are a few possible categorizations:

1. Functionality-Based Categorization:

- Core Language Functions: Text generation, embeddings, classifications, dialog generation, speech recognition, translation (e.g., GPT-4, BERT, Whisper).

- Advanced Reasoning and Behavior Models: Models with complex decision-making and contextual understanding (e.g., ChatGPT, Claude).

- Multimodal and Vision Models: Models that combine language with visual data (e.g., CLIP, Flamingo).

- Infrastructure and Frameworks: Tools that enable building or integrating AI models (e.g., KI-NLP, LangChain).

- Application Layer: User-facing applications such as generative assistants and content creation tools (e.g., Jasper, Copy.ai).

2. Application Domain Categorization:

- General Purpose Models: Versatile models for text understanding and generation (e.g., GPT-4, BERT).

- Conversational AI: Focused on dialogue generation and interaction (e.g., DialoGPT, BlenderBot).

- Knowledge and Reasoning Models: Advanced AI for decision-making (e.g., Claude, GPT-4).

- Creative and Content Generation Tools: Used for content creation and ideation (e.g., Jasper, Notion AI).

- Data Processing and Management: Tools for storing and searching embeddings (e.g., ChromaDB, Weaviate).

3. Ecosystem-Based Categorization:

- Big Tech Platforms: OpenAI, Google DeepMind, Meta AI, Microsoft AI.

- Independent and Open Source Initiatives: EleutherAI, Stability AI, Cohere.

- Niche and Specialized Platforms: Moonshot AI, Mistral AI.

- Infrastructure Providers: AI21 Labs, LMSYS Org.

4. User Interaction and Deployment Categorization:

- Backend AI Models: Used primarily in server-based applications (e.g., GPT-4, Bloom).

- Frontend and User Interaction Models: Deployed in chatbots and voice assistants (e.g., DialoGPT, Whisper).

- Integrated AI Agents: Systems combining multiple models for complex tasks (e.g., Auto-GPT, BabyAGI).

- Standalone End-User Apps: Products directly used by non-technical users (e.g., Jasper, Perplexity.ai).

5. Contextual and Real-Time Processing Categorization:

- Real-Time Processing Models: Speech recognition and dialog systems (e.g., Whisper, DialoGPT).

- Batch Processing and Analysis Models: Text analysis and classification tools (e.g., BERT, RoBERTa).

- Context-Enhanced Models: Internet-augmented dialogue generation (e.g., BlenderBot).

6. Adoption Stage Categorization:

- Emerging Technologies: Innovative models and frameworks still gaining traction (e.g., Mistral AI).

- Mainstream Implementations: Widely adopted and established models (e.g., GPT-4, Whisper).

- Experimental and Research Models: Niche applications and research-focused frameworks (e.g., KI-NLP, Sphere).

Final Thoughts:

The original categorization focuses on the evolution and application of language models, but we could alternatively focus on their functionality, domain, ecosystem, user interaction level, processing context, or adoption stage. Each approach offers a different lens to understand how these tools and technologies are positioned within the AI landscape.

If I were to categorize these tools to align with the Google DeepMind Product Manager (Model Behavior) role, I would focus on the following dimensions:

1. Model Behavior and Interaction:

- Human-Like Interaction Models: Tools and models designed to simulate conversational behavior (e.g., ChatGPT, BlenderBot).

- Ethical and Safe Models: Models designed with an emphasis on fairness, transparency, and bias mitigation (e.g., Gemini, Claude).

- Contextual Understanding Models: Systems that adapt responses based on context (e.g., LaMDA, GODEL).

- Personalization and Adaptability: Agent systems that adjust their plans based on user goals and intermediate results (e.g., Auto-GPT, BabyAGI).

Why?

As a PM at DeepMind focused on model behavior, it’s crucial to understand how AI models behave in various contexts, particularly how they simulate human interaction, manage context, and ensure ethical output.

2. Multi-Modal and Complex Reasoning Models:

- Vision and Language Models: Combining textual and visual data for richer responses (e.g., CLIP, Flamingo).

- Large Behavior and Action Models: AI capable of making nuanced decisions (e.g., Gemini, Claude).

- Reasoning and Cognitive Models: Models with strong inference and reasoning capabilities (e.g., GPT-4).

Why?

DeepMind places a strong emphasis on multi-modal AI and models that demonstrate cognitive reasoning. Categorizing by these capabilities helps identify areas where human-like reasoning is critical.

3. Infrastructure and Deployment:

- AI Ecosystem Integrators: Tools that bridge multiple models and interfaces (e.g., LangChain, Flowise).

- Hosting and Scalability: Solutions that support model deployment at scale, such as hosting services, vector stores, and flow builders.

- Local and Edge AI: Tools such as local offline inference servers that support offline inference and low-latency interactions.

Why?

As a PM, understanding how models are integrated into user-centric applications and how they stay efficient at scale is vital for product performance and user experience.

4. Real-World Application and User Engagement:

- Generative and Creative Assistants: Tools that enhance productivity and creativity (e.g., Jasper, Notion AI).

- Interactive and Adaptive Agents: AI systems that dynamically respond to user input (e.g., BabyAGI).

- End-User Personalization: Customizable applications for diverse user contexts (e.g., Copy.ai).

Why?

A key aspect of model behavior is understanding how AI meets user expectations and needs in practical applications. This category focuses on user engagement and experience.

5. Research and Innovation Hubs:

- Open Research Organizations: Groups focused on cutting-edge AI research (e.g., EleutherAI, AI21 Labs).

- Experimental Research Systems: Testbeds for new approaches such as grounding models in web-scale knowledge (e.g., Sphere, Side).

- Collaborative AI Projects: Initiatives bringing together academia and industry (e.g., LMSYS Org).

Why?

To innovate at DeepMind, it’s essential to stay connected with cutting-edge research and understand where the field is heading, especially regarding model behavior and ethics.

Final Thoughts:

This categorization reflects the role’s focus on model behavior, multi-modal interactions, ethical AI practices, and user engagement. By organizing tools around behavioral impact and deployment contexts, I would demonstrate my ability to think critically about how DeepMind’s models interact with users and the world.